I was really looking forward to the Sakai conference in Atlanta this year because, with my recent involvement with Blackboard as the Sakai Chief Strategist, it was the first time since 2007 that my non-academic work life was nearly 100% focused on Sakai. In order to achieve what I plan to achieve in my role at Blackboard, Sakai needs to be a success and I needed to find a way to make Blackboard a part of that success in a manner that is supportive of the community. So I am once again back in the middle of all things Sakai.

The State of the Sakai CLE

The Sakai Technical Coordination Committee (TCC) is now two years old, having been formed in June of 2010. The formation of the TCC was a Magna Carta moment where those working on the CLE asserted that they controlled the direction of the CLE and not the Sakai Foundation Board of Directors. Now that the TCC is two years old, the culture of the Sakai community has completely changed and the TCC is very comfortable in its Sakai CLE leadership role.

This was evidenced in the pre-conference meeting, several talks throughout the conference, and most strikingly in the day-long Sakai CLE planning meeting on the Thursday after the conference. The TCC has 13 members but there were over 40 people in the (very warm) room. The TCC is a membership body but does all of its work in public on the Developers List and the TCC list. TCC meetings are also open to anyone to attend and contribute.

The goal of the planning meeting was to agree on a roadmap, scope and timeframe for the Sakai 2.9 Release as well as a general scope for Sakai 2.10.

The agenda was very long but the group moved quickly through each item having the right kinds of conversations about issues balancing the need to have a complete and yet solid 2.9 release in a timely manner (Mid-Fall 2012 hopefully). The meeting was led by the current TCC chair Aaron Zeckoski of Unicon. We had the right amount of discussion on each item and then moved on to the next topic to make sure we covered the entire agenda.

I was particularly interested in figuring out items that I could accelerate by using Blackboard funds and resources. But I wanted to make sure that we had community buy-in on the items before I set off to find resources. I was quite happy that we will include the new skin that came from Rutgers, LongSight, and University of Michigan. We decided to put the new skin into Beta-6 but after the meeting decided to move it to Beta-7 because there were so many little things in Beta-6. Most of the Sakai 2.9 decisions were carefully viewed through a lens of delaying the release as little as possible.

The Coming Golden Age of the Sakai CLE

To me the biggest problem that the Sakai community faces (OAE and CLE) is that the CLE is incomplete and as such is weak in competitive situations when facing products like Canvas, Moodle, Desire2Learn, eCollege or Blackboard. From its inception, Sakai has been more of a Course Management System than a Learning Management System. Sakai 2.x through Sakai 2.8 is incomplete because it lacks a structured learning content system like Moodle Activities, Blackboard Content, ANGEL Lessons, etc. This is a feature that can create a structure of learning activities that include HTML content, quizzes, threaded discussions, and other learning objects. These structured content features have selective release capabilities as well as expansion points.

The IMS Common Cartridge specification provides a way to import and export the most common elements in these structured content areas and move learning content in a portable manner between LMS systems. Sakai 2.8 (and earlier) simply did not have any tool/capability that could import a cartridge that included a hierarchy of learning objects. Melete (not in core) could import a hierarchy of HTML content, and Resources can import a hierarchy of files but nothing could import a Common Cartridge and that meant that Sakai 2.8 was missing essential functionality that every LMS with significant market share had.

Other efforts like Learning Path from LOI, Sousa from Nolaria, and OpenSyllabus from HEC Montreal went down the path of building hierarchical structures beyond Melete and Resources, but never got to the point where they were full-featured enough to become core tools and put Sakai on equal footing with the structured content offerings from other LMS systems with real market share (i.e. Sakai’s competitors).

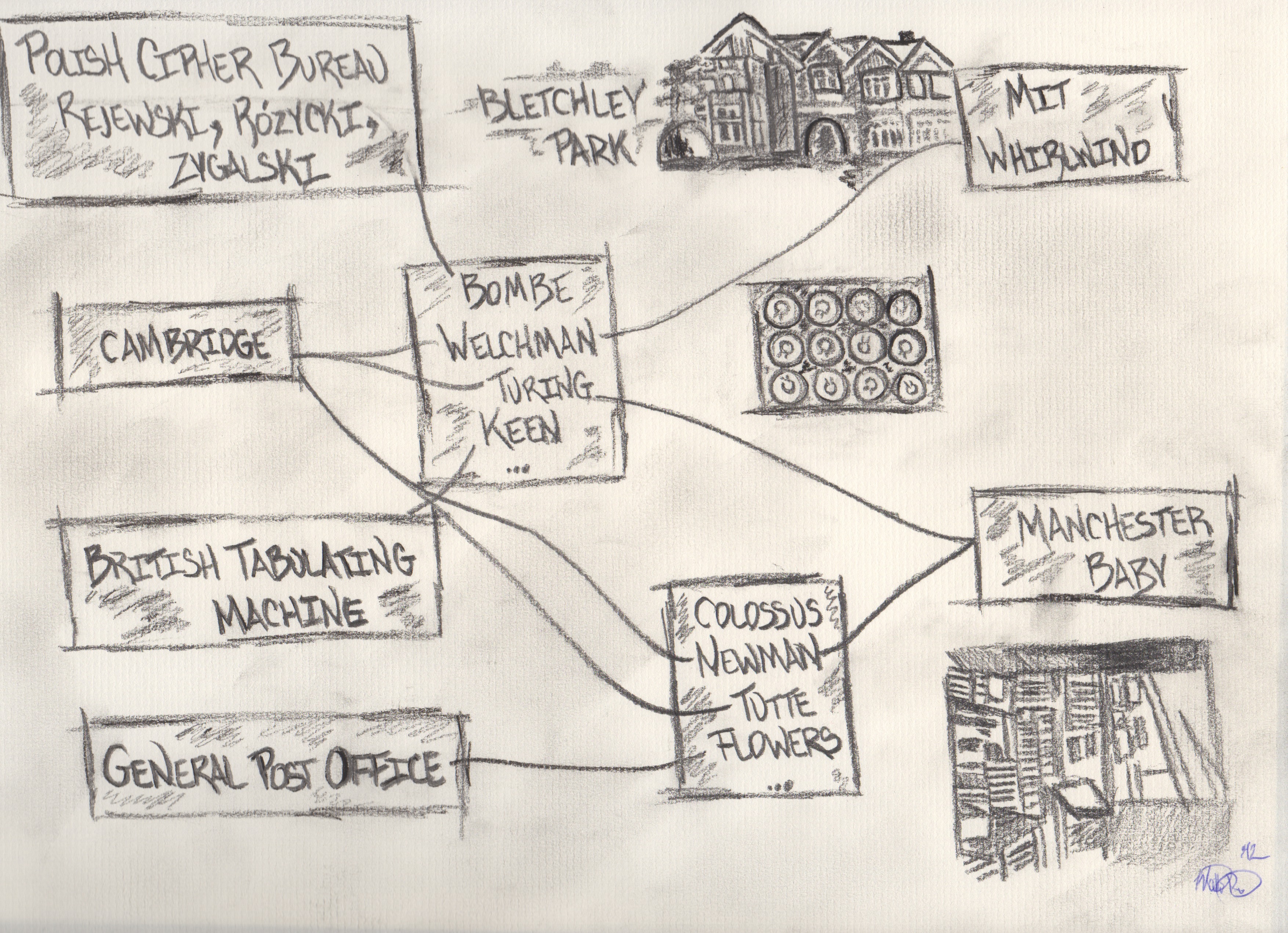

That all changed in the summer of 2010 when Chuck Hedrick and Eric Jeney of Rutgers University decided to build Lesson Builder (now called Lessons) for Sakai. Instead of building Lessons on a design of their own making, they started with a competitive analysis of the other LMS systems in the marketplace to determine the core features of Lessons. This alignment with the other LMS systems in the marketplace also perfectly aligned Lessons with IMS Common Cartridge.

Chuck and Eric built Lessons aggressively and deployed it at Rutgers as it was being built. They took faculty and staff input as well as input from others in the community who grabbed early versions of Lessons and ran them in production at their schools. In early 2011 we decided Lessons was mature enough to be part of the Sakai 2.9 release and later in the year, I added support for IMS Learning Tools Interoperability so that Lessons could be certified as able to import IMS Common Cartridge 1.1.

Even though Sakai 2.9 stalled in early 2012 for lack of QA resources, a number of schools put the 2.9 Beta version with Lessons into production because they had a painful need for the Lessons capability. The great news is that Lessons has held up well both in terms of functionality and performance in those early deployments. All of that production testing will help ensure that Sakai 2.9 is solid.

I suggest that Sakai 2.9 with Lessons will trigger a Golden Age of the Sakai CLE. In a way I am completely amazed at how well the Sakai CLE through 2.8 has fared in the marketplace without the Lessons capability. Sakai has taken business from Blackboard Learn, WebCT, Moodle, ANGEL, and others without having the Lessons capability – a feature that many consider essential. I shudder to think how much market share we would have at this point if the Sakai CLE had Lessons in 2006 when Blackboard purchased WebCT. I spent a lot of time talking to WebCT schools and they loved Sakai except for its lack of structured content. So we left a lot of that market share in 2006-2007 on the table.

I honestly don’t think that the primary purpose of an open source community like Sakai is to get more market share – but it is a nice measure of the value of the software and community that you produce. Commercial vendors like rSmart, LongSight, Unicon, Edia, Samoo, and now Blackboard use Sakai to meet the needs of customers for whom Sakai is a good fit and good value. We can be proud of the aggregate market share of both the direct adopters of the community edition and the customers of the commercial providers of Sakai.

The LMS market in North America is hotly contested with strong entrants like Canvas and OpenClass and well-established competitors like Desire2Learn so I don’t know how well Sakai (even with all the 2.9 gooey goodness) will be able to gain market share. But I do think that there is an amazing un-met need for Sakai outside North America. Outside North America, I see the primary market players as Learn, Sakai, and Moodle.

If you look at the market where Learn and Moodle are the only significant players, I think that Sakai 2.9 has a lot to bring to that market. I think that Moodle and Learn have their strengths and weaknesses and I think that Sakai 2.9 is strong where Learn and Moodle are weak, and that Venn diagram of strengths and weaknesses leads to natural adoption and resulting market share. I am happy to talk more over beers about the precise areas of relative strengths and weaknesses between Sakai, Moodle, and Learn.

So to me the Golden Age of the Sakai CLE is the 2.9 (and then 2.10) release that allows Sakai to maintain or slightly grow market share in North America by winning more than we lose and dramatically growing Sakai market share beyond North America.

I also think that once we have 2.9 out the door and installed across the Sakai community, the pace of innovation in the Sakai CLE can slow down and we can focus on performance, reliability, and less visible but equally important investments in the quality of the Sakai code base. I think that we need one more major release (Sakai 2.10) to clean up loose ends in Sakai 2.9, but as we move beyond Sakai 2.9, I think we will see a move from one release per year to a release every 18 or 24 months. We will see more 2.10.x releases during those periods as we tweak and improve the code. In a sense, the sprint towards full functionality that we did in 2004-2007 and then picked back up in 2010-2012 will no longer be necessary, leading to a golden age where we can take a breath and enjoy being part of a mature open source community collectively managing a mature product from 2013 and beyond.

I am telling this same story internally within Blackboard in my role as Sakai Chief Strategist. Invest in 2.9, get it solid and feature complete and then invest in 2.10 and make it rock-rock-rock solid. Any notion of deploying scalable Sakai-based services in my mind takes a back seat to investment in improving the community edition of Sakai in the 2.9 and 2.10 releases. I am not taking this approach because Blackboard has a long history of charitable giving. I am taking this approach because I see this approach (fix the code before we deploy anything) as the way to maximize Sakai-related revenue at Blackboard while minimizing Sakai-related costs. Even though Learn, ANGEL, and MoodleRooms are my new colleagues at Blackboard, and even though any Sakai business that Blackboard undertakes will likely not be Blackboard’s largest line of business, I want Sakai to be the most profitable line of business in the Blackboard portfolio so I end up with enough to fund tasty steak dinners and plenty of travel to exotic locations :)

Sakai OAE and Sakai CLE Together

There has been a testy relationship between the Sakai OAE and Sakai CLE community since about 2008. Describing what went wrong would take an entire book so I won’t try to describe it here.

The good news is that when the Sakai CLE TCC was formed in 2010, it set the wheels in motion for all of the built-up animosity to go away in time. At the 2011 Sakai conference there were a few flare-ups as folks in the OAE community needed to let go of the notion that the Sakai CLE community were resources that should be controlled by the OAE management.

The great news is that in 2012, everything is as it should be. The Sakai CLE and Sakai OAE communities see themselves as independent peers with no remaining questions of “who is on top” or “who does the Sakai board like best”. Not only have all of the negative feelings pretty much become no more than background noise, there is increasing awareness of the interlinked nature of the CLE and OAE. The OAE needs the CLE to be successful to maintain the Sakai presence in the marketplace while the OAE matures, and the CLE forms the basis of the OAE hybrid mode, so the more solid the CLE is – the more successful the OAE will be.

While I want the CLE to be quite successful and have a long life, its founding technologies like Java Server Faces, Hibernate, Sticky Sessions, Iframes, and a host of other flaws mean that it is just not practical to move the CLE technology to the point where it can be a scalable, multi-tenant, cloud-based offering without a *lot* of care and feeding. The OAE is a far better starting point to build such a service given that it started much later (i.e. 2008 versus 2003). The OAE was born in a more REST-based, cloud-style world. Sometimes you need a rewrite – and history has shown (in Sakai and elsewhere) that rewrites take a long time – much longer than one ever expects. The community has wisely switched from seeing the CLE as resources coveted by the OAE to seeing investment in the CLE as buying time for the OAE work to finish, taking as much time as is needed.

The only bummer about this year’s Atlanta meeting was that the CLE folks and OAE folks both had quite full schedules making progress on their respective efforts so there was very little overlap between the teams. Usually when meetings at the end of the day “finish” what really happens is that the discussions continue, first in the bar, then at dinner, and then later at the bar or Karaoke. Because the CLE and OAE meetings were on different tracks, there was nowhere near enough overlap in the dinner and beer conversations. I think that at next year’s meeting we will address that issue.

Sakai + jasig = Apereo

Wow this discussion has been going on for a long time! The good news is that we seem to have very high consensus on all of the details leading up to the moment where the two organizations become one. It feels like we are down to crossing the t’s and dotting the i’s. It will still take some time to do the legal process – but those wheels are now started and I am confident we will have Apereo by Educause this year.

This is a long time in coming. Joseph Hardin and I had a discussion back in 2005 before we created the Sakai Foundation as to whether we should just join jasig instead of making our own foundation. We dismissed the notion because back then it was clear that we needed a focal point to solidify the definition of Sakai and what it was and the Foundation was a way to help make that happen and create a world-wide brand.

The decision to start our own foundation and not join jasig had its advantages and disadvantages.

We certainly advanced the Sakai brand with an active and visible board of directors and a full-time executive director in the form of first me and then Michael Korcuska. We were able to come together and engage and “defeat” Blackboard in the patent war of 2006. We had well attended bi-annual conferences that later became once per year out of financial necessity and grew a series of regional conferences around Sakai as well.

But with all those advantages there were some massive mistakes made because the Sakai Foundation ended up learning a few hard lessons that jasig had frankly already painfully learned several years earlier. And sadly those lessons took a long time to learn and caused significant harm to the Sakai Community. The very board of directors that was empaneled to nurture and grow the community was, by the middle of 2009, the greatest risk to Sakai’s long-term survival.

I won’t go over all the mistakes that the Sakai board made between 2008 and 2011 – that would take an entire book. I will just hit the high points:

When your funding source is higher education – money does not grow on trees. The Sakai *project* in 2004-2005 was funded by large grants and large in-kind contributions and handed the Foundation a $1 million surplus. The annual membership revenue peaked in 2006 and has fallen steadily ever since. Here is one of many rant posts where I go off on the financial incompetence of the Sakai board during that period:

Sakai Board Elections – 2010 Edition

It literally took until March 2010 for the board to understand that it needed to live within its means so as to not go bankrupt. The jasig group learned to live within its means and align their spending with their real revenues years earlier.

The second major problem that the Sakai board had was its own sense of how much power it had over volunteer members of the community. The Sakai Board saw itself as a monarchy and saw the community as its subjects. The perfect example of the Sakai Board’s extreme hubris was the creation of the ill-fated Product Council. Again this was solved in June 2010 and now in June 2012 there is very little residual pain from that terrible decision – so we are past it.

As a board member of the Sakai Foundation in 2012, I am very proud of the individual board members and very proud of how the board is currently functioning as a body. It took from 2006 – 2010 to make enough mistakes and learn from those mistakes to create a culture within the board that is truly reflective of what an open source foundation board of directors should be.

The Sakai Foundation board has (finally) matured and is functioning very well. My board tenure (2010-2012) has been very painful and I have shouted at a lot of people to get their attention. But the core culture of the board has finally changed and it is in the proper balance to be a modern open source organization. If I rotate off the board at the end of this year or if my board position ends at the moment of Apereo formation, I am confident that the culture will be good going forward.

Why Merge?

So if everything is so perfect, why then should we merge, with Sakai becoming two projects (Sakai OAE and Sakai CLE) within Apereo?

Because the Sakai brand, while a strong brand and known worldwide, can never expand the scope beyond the notion of a single piece of software in the teaching and learning marketplace. The brand Sakai is successful because it is narrow and focused and everyone knows what it means. This is great as long as all the “foundation” wants to do is build one or two learning management systems – but terrible if we want to broaden the scope to all kinds of capabilities that work across multiple learning management systems.

What if we wanted to start a piece of software to specifically add MOOC-like capabilities to a wide range of LMS systems using IMS Learning Tools Interoperability? Would we want to call it Sakai MOOC? That would be silly because it would imply that it only worked with one LMS. We should call it the MOOCster-2K or something like that and have a foundation where the project could live.

The Sakai brand is too narrow to handle cross-LMS or other academic computing solutions. The jasig brand is nice and broad – but there is nothing in jasig about teaching and learning per se. So the MOOCster-2K would not fit well in jasig because it needs to be close to a community (like Sakai) that has teaching and learning as its focus.

The Apache Foundation would be perfectly adequate except that there are no well-established communities that include teaching and learning as a focus.

So the MOOCster-2K needs to make the MOOCster Foundation and go it alone and perhaps take 5-8 years and perhaps make mistakes due to growing pains like both the Sakai Foundation and jasig endured. But why? Why? Why waste that time in re-deriving the right culture when all the MOOCster community wants is a place to house intellectual property and pay for a couple of conferences per year?

So we need Apereo – and we need it to be the sum of Sakai + jasig. It needs to have a broad and inclusive brand and a mature open source culture throughout, and it needs to include all of the academy – both the technical folks and the teaching and learning folks and the faculty and students as well. It will take this group of people with a higher education focus to truly take higher education IT through the next 20 years if we are to make it through the next 20 years without begging for scraps from commercial vendors that see higher education as a narrow and relatively impoverished sub-market of their mainstream business lines.

I come out of the Atlanta conference even more convinced of the vitality of Apereo than ever before. While there are many benefits cited for combining the organizations, having a single conference is the most important benefit of all. It was so wonderful to see all the uPortal folks in the bars and know we were all in the same building. But this was not some kind of Frankenstein conference with parts and pieces awkwardly sewn together. I must hand it to Ian Dolphin, Patty Gertz, and the conference organizers. The tracks were nicely balanced and literally we could have the conference be whatever we wanted it to be. It was so well orchestrated that I don’t think anyone would ever suggest that these two groups should ever have separate conferences from this point going forward.

Summary

Wow. Simply wow. Things in Sakai are better than they have been in a long time. Excitement is high. Internal stresses within the community are almost non-existent. The Sakai Foundation is financially stable (thanks to Ian Dolphin). Both the CLE and OAE are moving their respective roadmaps forward and rooting for each other to succeed.

Those of you who have known me since 2003 know that I do *not* candy-coat things. Sometimes when I think things are going poorly I just sit back and say nothing and hope that things will get better. And other times I come out swinging and don’t hold anything back.

The broad Sakai community is hitting on all cylinders right now. It will be a heck of a year. I promise you.