Thanks to this blog post:

http://thinkwrap.wordpress.com/2006/12/11/how-to-duplicate-cd-on-a-mac/

I now have solid instructions again as to how to duplicate an audio CD-ROM on my Mac using Disk Utility.

I pre-ordered my Apple iPad in that first-day flurry that allegedly sold 50,000 iPads in the first two hours. I knew I had to have the new iPad and I know the iPad will simply revolutionize everything.

But….

I have no idea what I will do with my iPad when I get it in a few weeks. I really don’t. I never saw much value in an iPod Touch – I am not crazy about music or movies – I have an iPhone and love it because it is a small device that includes data networking, E-Mail, Twitter, web browsing, Music and a Phone – all in a pocket-sized form factor. But my iPad has little of that and needs WiFi to communicate.

I sure hope I like iWork on it.

Here is something I would like – I would like it to be able to handle a bunch of PDF and HTML blobs – like all of the Python Documentation and all the JavaDoc for Sakai and my Python for Informatics Textbook and my Networks textbook by Jon Kleinberg. I don’t want to buy new books – Kindle-style – I want to read the ones I already have. And I want to browse HTML stored locally on the iPad – not over the web or via iTunes. I don’t want it so that the iPad is only useful with a network connection – I want it to be like a book that works without WiFi.

I have this deep and abiding fear that I won’t be able to just put files on my iPad – that somehow I will need to view all data through the iTunes lens or send them to myself in E-Mail as attachments. Or perhaps I need to write an iPad application called “File Folder Downloader/Reader” that is kind of like the Mac OS Finder or Windows Desktop.

I want to put my stuff on the iPad just like on my laptop. I just want to drag and drop it from one to the other and then be able to go “off the net” with my iPad and read it.

I have this big fear that, although I have never jailbroken any Apple product, I will have to jailbreak my iPad immediately so it can store and open local files.

With all of this angst and concern inside of me, then why did I buy one within the first hour?

Uh – “Because” is all I can think of to say. Just had to have one – I will work out the details of why I want one later.

I don’t know why I am in a weird mood this morning – probably because I am writing the exam for SI502 – which of course includes a question about the request-response cycle.

I started thinking about how important the request-response cycle is to information, networks, and people these days and somehow I leapt to the idea that we needed something like the “Pledge of Allegiance” to say at the beginning of every SI502 class to reinforce this notion of the importance of request-response cycle.

Here is my first draft of the “Pledge of Allegiance to the Web”:

“I pledge allegiance to the web and the open standards upon which it’s built, and to the request-response cycle upon which it stands, one Internet for the greater good, indivisible, with liberty and equal access for all.”

Comments welcome.

Now back to writing that midterm exam for SI502.

I have been trying to find time to write a book about the Sakai experience. I am thinking about a book like “Dreaming in Code” but about Sakai. I will focus on the early years 2004-2007 when I was most heavily involved.

It will be a combination of historical description, open source lessons, fun anecdotes, and inside information about what it took to make Sakai happen.

I am going to try to write it as light and high level as possible to appeal to as wide an audience as I can.

I am planning on starting in earnest next week and having a draft done by the Sakai conference in June.

As part of my pre-work, I have developed a time-line that I will use to trigger my memories to write into the book.

http://docs.google.com/Doc?docid=0AbX1ycQalUnyZGZ6YjhoeHdfMjY3ZnBzdHRmaw&hl=en

The timeline is in a Google Doc – if you have any comments, memories, or answers to the questions in the document, any help would be much appreciated.

I was going to write a long message to John Norman about wishing for a 2.x PMC that functioned without higher-level priority setting authority and came across this note from John to Clay and Anthony on January 24 about the Maintenance Team:

“My instinct also is to avoid formality unless it is shown to be needed. Why don’t we just proceed with these people acting in a broadly similar way to an Apache PMC (which allows for anyone to be out of contact for a period) and see if that is good enough. I’m more interested to know if the maintenance team has managed to get any bugs fixed and what issues are concerning them with regard to their mission to improve the code.”

Once I saw this, I realized that I could not say it any better than John already had said.

The message I did not send

On Mar 13, 2010, at 4:03 AM, John Norman wrote:

Personally, I see it as a valuable function of the MT to ‘tidy up’ the code base. I am not sure I care when a decision is made so long as (a) it is properly discussed and consulted on and (b) all decisions that affect a release are reviewed at the same time. So I can view this as early opening of the consultation (good) and potentially a process that allows decisions to be reviewed carefully without rushing (also good). So, while I accept Stephen’s point, I think I might advocate that we don’t wait to consider such issues, but we do insist that they be recommendations and if acted upon (e.g. for testing dependencies) they should be reversible until the tool promotion decision point.

It feels like the PM and/or Product Council should be able to help here

On Mar 14, 2010, at 5:39 AM, John Norman wrote:

I don’t see the ambition for the structures that you attribute to them. I think we are seeing incremental steps to fill gaps and respond to community needs. Of course if you don’t share those needs it may be hard to empathise and my own view may not be that of other participants in the efforts, but I have none of the intent that you seem to rail against.

Chuck’s Response:

John, the thing I rail against is the statement you made above – “It feels like the PM/PC should be able to help here”. I interpret (and I think others do as well) that statement as “perhaps the MT should get approval from the PM/PC in order to properly set overall priorities for the community”. What bothers me is that I see a PM/PC structure that seems primarily set up for Sakai 3.x having “authority” over a structure that is set up for Sakai 2.x simply because 3 > 2.

By the way – I am not sure that the MT in its current form *is* the right place for these 2.x discussions either – what I dream of is one place where 2.x is the sole focus – not four places where 2.x is partial focus. I want separate but equal – I want 2.x to be able to follow its own path without priorities and choices about 2.x being made from the perspective of “what’s best for 3.x”. I want a win-win situation not a one-size-fits-all situation.

I *do* want to make sure that things get added to 2.x in order to make 3.x happen as effectively as possible – as a matter of fact my own contributions in 2.x in the past few months have been heavily focused on improved connectivity between 3.x and 2.x and I am really excited about the progress and looking forward to when 3.x runs on my campus. Even in some future state after my campus is running Sakai 3.x in hybrid mode and I am happily using it as a teacher, and perhaps I have even become an active Sakai 3.x contributor, I *still* will be opposed to structurally viewing Sakai 2.x priorities through a Sakai 3.x lens in the name of “overall brand consistency”.

And interestingly, if *I* think that the PC/PM is a group that cares mostly about 3.x and you think that the PC/PM cares mostly about 2.x – that itself is an interesting question. Particularly because you are a member of the PC and I am a confused 2.x contributor. :)

On Mar 14, 2010, at 5:39 AM, John Norman wrote:

PS I have long held that Sakai is a community of institutions, rather than a product. I think the efforts look a little different through that lens.

Chuck Wrote:

For Sakai 3.x, I think that it *is* a community of institutions and that is a pretty reasonable structure for Sakai 3.x at this point in its lifecycle – and for Sakai 2.x I think that it is a community of individuals at this point in its lifecycle and that is the perfect structure for Sakai 2.x at this point in time. *Neither is wrong* – it is the nature of the development phase of each project – and that is why I am uncomfortable with our current PC/PM “one-committee-to-rule-them-all” governance (or perceived governance).

There is some recent discussion on the Sakai lists about the role of the maintenance team, product council, and product management w.r.t. Sakai 2.x. I have become increasingly frustrated about how this community is treating the Sakai 2.x / Sakai 3.x work.

The thing that frustrates me the most is how there is this notion that somehow we need to alter our approaches to 2.x so as to give Sakai 3.x the greatest benefit and most resources. This is really grating to me every time I hear even the hint of a win-lose conversation about Sakai 2.x and Sakai 3.x.

The real problem in my opinion is that we have single management structures that are trying to “define Sakai”.

The real problem is that when you only have one management structure responsible for two very different efforts – it is very natural to spend some time thinking about trade-offs. And because some schools and individuals are a bit “out on a limb” with Sakai 3.x, I see a tendency for people who see themselves as community leaders conveniently conflating the “community will” with their own local risks and issues.

I understand the pressure that people who have bought into 3.x are feeling – it makes perfect sense – the whole community felt that pressure around 2.x in June 2005. We got through that and we are in a good place now with 2.x. That sense of pressure and feeling of risk is what spurs the right kind of investment. I hope that the stakeholders of 3.x see that the only way to reduce their risk is to step up and really contribute resources to 3.x. Believing that somehow risk is reduced by making a “really intricate and clever management structure” is usually a recipe for failure. If folks delegate the “worry” to a management structure – they are usually disappointed. They end up with “someone to blame” but not the result they desired in the first place.

So back to my main concern at hand – “What is the right management structure for 2.x?” My primary answer is “Not the same management structure as 3.x”. I would like to see these separated and allowed to evolve to meet the different needs of their real stakeholders for 2.x and 3.x.

There will be overlap – of course with the transition and there will be plenty of folks who will be involved in both – and over time as 3.x matures – people will shift – and as 3.x matures – its own management structure will naturally adjust to meet the new realities of the problems facing the development team.

—– Here is one of my responses to a message thread about this topic

On Mar 13, 2010, at 4:03 AM, John Norman wrote:

Personally, I see it as a valuable function of the MT to ‘tidy up’ the code base. I am not sure I care when a decision is made so long as (a) it is properly discussed and consulted on and (b) all decisions that affect a release are reviewed at the same time. So I can view this as early opening of the consultation (good) and potentially a process that allows decisions to be reviewed carefully without rushing (also good). So, while I accept Stephen’s point, I think I might advocate that we don’t wait to consider such issues, but we do insist that they be recommendations and if acted upon (e.g. for testing dependencies) they should be reversible until the tool promotion decision point.

It feels like the PM and/or Product Council should be able to help here.

— Chuck Says

As I listen to this, it all simply seems too complex – particularly for Sakai 2.x. Sakai 2.x is a mature and stable open source product with solid rhythm and an annual release. There are about 20 people deeply involved in fixing bugs and moving the product forward and getting releases out. I love the notion of some strategic 2.x code cleanup and would like to see a place where the 20 people that are working on 2.x could coordinate with each other so we don’t open too many construction projects at the same time. Communication and coordination are really valuable and necessary and the folks doing the work will naturally want to talk to each other about this.

It seems like we spend way too much time debating the “purpose and authority” of the PC, PM, and MT. That alone suggests a broken structure.

I would suggest that we move 2.x toward a situation where a single named “group/committee/etc” is where 2.x decisions are made – maintenance, release, everything. Like an Apache PMC for 2.x.

If Sakai 3.x wants layers of management and multiple interlinked committees to guide its progress and a marketing plan etc etc – that is their choice. I don’t personally like that approach to software development and so I can choose not to work on 3.x. If there was a single place that 2.x was discussed – I would probably join that group as I am quite interested in Sakai 2.x for the long-term because I think that many schools will be running Sakai 2.x for the next 7-8 years and so some investment in 2.x will be warranted for some time.

I would like to see us start applying different approaches to structuring our 2.x effort and 3.x effort – they are at such different phases in their life-cycles, and attempting to come up with the “one true management structure for all time” seems to be an impossible task – so perhaps we should just accept the fact that 2.x and 3.x are *different*, create separate structures for each, and let those structures be controlled by the people in them and meet the needs of the people doing the work in each activity.

/Chuck

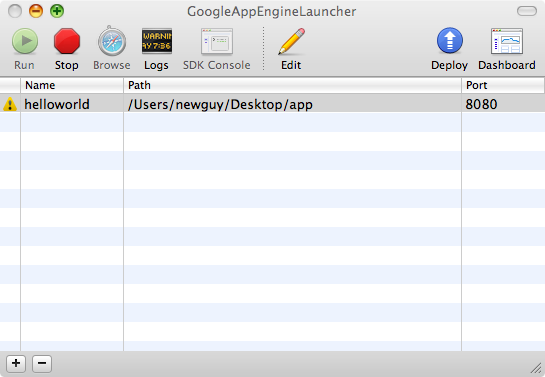

One of the more mysterious errors one gets with App Engine is when the application fails to start up with the following error:

    *** Running dev_appserver with the following flags:
        --admin_console_server= --port=8080
    Python command: /usr/bin/python2.6
    Traceback (most recent call last):
      File "/Applications/GoogleAppEngineLauncher.app/Contents/Resources/GoogleAppEngine-default.bundle/Contents/Resources/google_appengine/dev_appserver.py", line 68, in <module>
        run_file(__file__, globals())
      File "/Applications/GoogleAppEngineLauncher.app/Contents/Resources/GoogleAppEngine-default.bundle/Contents/Resources/google_appengine/dev_appserver.py", line 64, in run_file
        execfile(script_path, globals_)
      File "/Applications/GoogleAppEngineLauncher.app/Contents/Resources/GoogleAppEngine-default.bundle/Contents/Resources/google_appengine/google/appengine/tools/dev_appserver_main.py", line 66, in <module>
        from google.appengine.tools import os_compat
    ImportError: cannot import name os_compat

If you look through the forums there is a lot of talk about Python 2.5 or Python 2.6 and suggestions to go back to Python 2.5 – that seems not to be the problem at all because it fails in 2.5 as well with the same error.

I have carefully reproduced the problem: it happens when a non-administrator user tries to run the App Engine Launcher. The fix is simply to make that user an administrative user. And here is the strange thing – once you make the user an “Allow to administer this computer” user, start App Engine once as that user with admin powers, then log out, take away the admin powers, and log back in – then it works! Crazy.
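If you want to check whether an account has admin rights before starting the launcher, something like the following rough sketch might help. It assumes that administrators on Mac OS X are members of the `admin` group and that Python's `grp` module reports that membership; the helper name `is_in_group` is my own invention and is not part of App Engine.

```python
import grp


def is_in_group(user, group_name, lookup=grp.getgrnam):
    """Return True if `user` is listed as a member of `group_name`.

    `lookup` defaults to grp.getgrnam; it is a parameter only so the
    check can be exercised without a real group database.
    """
    try:
        return user in lookup(group_name).gr_mem
    except KeyError:
        # The group does not exist on this machine.
        return False
```

Calling `is_in_group(getpass.getuser(), "admin")` (after `import getpass`) should then tell you whether the logged-in account is in the group that, under the assumption above, marks an administrator.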

This problem is generally reported on Mac OS 10.6 versions.

Now after an hour of debugging and researching, I can get back to recording those “introductory – see how easy this is to install” videos :)

I started a tradition last year to try to spend Spring break coding somewhere. This year thanks to gracious support from JISC to participate in the JISC Dev8D Developer Days in London, I had airfare to Europe. I decided to add some pre-and-post travel at my own expense to touch base with some collaborators in Europe. It was a wonderfully productive trip – here are the details.

I departed for Zurich on February 20, arriving on Sunday Feb 21 – in order to save costs, I stayed with my good friend Dave LeBow in Zug and commuted to Zurich each day.

Monday February 22

I spent today at the University of Zurich working with the OLAT (Online Learning and Training) team. I worked with Joel Fisher, Guido Schnider, Florian Gnagi, and others. I had built a simple OLAT Course Node extension for IMS Basic LTI which we cleaned up and checked into the 6.4 Branch of OLAT for the next release.

Tuesday February 23

I spent most of the day in Zurich with the OLAT team and gave a talk on Basic LTI featuring Marc Alier’s Dinosaur video. We finished the LTI Course Node and talked about Common Cartridge and QTI 2.1 plans. That night I hopped an EasyJet flight to London.

Wednesday February 24 – Saturday February 27

I already blogged about my Dev8D experiences.

Generally, I was going all-out every day and every night – I gave a new presentation on three of the four days of Dev8D and was fixing / documenting something nearly constantly. My most fun talk was my “Informatics: The End of Dilbert” lightning talk – which I had been afraid to give because my thoughts were still a bit unformed and I thought it might be controversial. But I figured “what the heck” and the crowd seemed to like it.

Sunday February 28

Travelled to Cambridge – Talked to Ian and John about Sakai 3, the Sakai board, and other stuff. It was nice to catch up in person. I apologize to their families for taking them away to the Eagle pub on a Sunday afternoon.

Monday March 1

Visited Matthew Buckett at Oxford. We reviewed their Sakai installation with Hierarchy – very nice and very cleverly done with surprisingly simple modifications to Sakai. It shows the flexibility inherent in Sakai 2’s underlying approach. Matthew also showed me their mobile portal – m.ox.ac.uk – it is done in Django and uses a very nice architecture. I hope to get University of Michigan interested in being a partner in the effort when Oxford open sources it all in a few months.

Tuesday March 2

Went to the Open University at Milton Keynes – to visit with all my pals there and the OLnet team. I may get some funding to work with Open University folks such as the Cohere Project that is building a tool to build concept maps of web content. Cohere is a Firefox Plugin – kind of like CloudSocial – but further ahead and more practical. I may also do some other things like a CC authoring program or Basic LTI in their production LMS.

Wednesday March 3

Visited my friends at the Open University of Catalonia (UOC) Campus Project and got some updates. We talked about Open Social and their recent integrations that made MediaWiki and WordPress into OKI Bus tools. It was really clear that their architecture had morphed to become very similar to IMS Basic LTI and that it would be a simple matter to make a Basic LTI Producer for these tools.

Here is a cool video taped in 2008 in an earlier visit to UOC: Campus Project Overview

Thursday March 4

Went to visit Marc Alier and Jordi Piguillem at the Polytechnic University of Barcelona (UPC). There I also met Nikolas Galanis who had done the most recent work on the Basic LTI support for Moodle 1.9 – it was in good shape but we put the finishing touches on it to bring it up to par with the other Basic LTI Consumers. Thursday evening I ended up at the Universal Disco to spend a few hours catching up with Lluis Vincent of LaSalle University.

Friday March 5

We taped a podcast for Marc’s “Bite of the Apple” podcast and talked about things like the history of technology before Windows, Linux and Mac OS/X in the 1990’s and how standards and interoperability have been an important part of how we got where we are. Later Nikolas and I continued to clean up the Moodle 1.9 Consumer.

Saturday March 6

I continued to work on Moodle from my hotel room doing cleanup and then using it to test my IMS Certification for Basic LTI Tool Consumers and produced this video:

http://www.vimeo.com/9957979

After three days of coding on Moodle, I gave myself a little “treat” by renting a scooter for a few hours and seeing if I enjoyed it. The scooter was fun but a little scary. I had one close call that was enough for me to decide (at least for now) – no more scooters for Chuck in Barcelona.

After I came back from scootering, I decided to fix a few Sakai bugs in the JSR-168 portal which were identified during the JISC Dev8D meeting and get that taken care of while it was all fresh in my mind. I finished the code, tested things, checked in my fixes and went to bed.

Sunday March 7

The flight back was pretty grueling – I had kind of worn myself down over the two weeks by eating too much rich food and not getting enough sleep. I felt pretty queasy all the way back. But I got back in one piece and fell into bed.

Monday March 8

I woke up with a splitting headache and had to teach my SI502 class at 8:30 AM. I made it through the class and took a nap in the afternoon. But then I could not get the idea of a WordPress plugin that I saw at UOC out of my head so I started coding, imitating the UOC code. In four hours, during which I kept having to take little naps because I felt so woozy, I had a working prototype of a Basic LTI Producer for WordPress. I gleefully sent around screen shots and the URL access information for the Basic LTI tool and quickly got confirmation that it was working from Desire2Learn, Moodle, and Jenzabar.

Tuesday March 9

Antoni Bertran of the Open University of Catalonia Project quickly imitated the patterns in my WordPress Basic LTI Producer and wrote his own Basic LTI Producer for MediaWiki and released the source code.

Wednesday March 10

I got Antoni’s MediaWiki plugin installed and running on one of my servers for folks to test and of course it worked great in Desire2Learn, Sakai, Moodle and Jenzabar (this interoperability stuff is starting to feel really fun). I also helped Steve Swinsberg of Australian National University improve the Sakai Basic LTI Producer so he can plug Sakai tools into uPortal using Basic LTI.

I was feeling well enough to eat solid food again on Wednesday.

Thursday March 11

I think that I am caught up and ready to go back to programming being a background activity. It was fun to drop everything else and purely code for 2+ weeks and do so with my best friends and collaborators around the world.

In summary, I visited three countries and six cities, wrote and committed code in three different open source projects, and found the coolest new Basic LTI tools so far (MediaWiki and WordPress).

It just goes to show that sometimes doing a bit of wandering around can result in some really cool things. I wonder how I can top it during next year’s spring break.

programmer_mode = off teacher_mode = on

I seldom blog about my oldest daughter Mandy – she is a bit of an online recluse so I have always respected her privacy. But somehow she found a 2008 prank call to Fashion Bug on YouTube where she was the one on the receiving end of the prank. I thought it was funny – so I figured I would share.

http://www.youtube.com/watch?v=rwS77fXgsNI

I have to agree with one of the commenters on the YouTube video: “Wow. That girl at Fashion Bug kind of pwn’d you guys…”. Smoothly done. Dad is proud.

I came to Dev8D in London with two tasks in mind. First, the IMS Global Learning Consortium had just released the public draft of the IMS Basic Learning Tools Interoperability specification and I needed to come up with some materials to help developers understand and use the specification. My second goal was to give a workshop based on my O’Reilly book titled, “Using the Google App Engine”. This was my first Dev8D experience so I was not sure what to expect.

This is one of many Dev8D happy stories. You can visit the Dev8D wiki to see the other Dev8D Happy Stories.

Like any good procrastinator, I was putting the finishing touches on my PHP sample application for IMS Basic LTI on the flight and train ride into London. I knew it would be a bit rough around the edges but since I had a free laptop and free iPod as inducements to get folks to try the software out, I figured I would have some takers who would help me work out the kinks and I could work on things throughout Dev8D.

On the first day, I spent most of my time either in the Base Camp or in the Expert talk area. When I gave my first expert talk, my job was to do a sales pitch to the developers in the audience to convince them that my bounty (building the best LTI tool) was the best bounty and that I would be able to help them succeed. Since I had just finished the sample code the night before, I did not have slides ready to describe my sample code – so I gave a general talk about how awesome Basic LTI was and promised to have a tutorial ready for the next day. I told them to download the code and start hacking. It turned out that a few of the attendees already worked on their campus VLE and a better way of plugging in an external tool was quite attractive to them, so we had a few programmer teams off and running.

I did not want to disappoint “Team Tools Interoperability” so I worked late on Wednesday night to build a set of slides on how to work with the sample code for my Thursday expert talk. On Thursday morning, I started talking with some of the programmers and explaining things and decided that I needed to add a feature to my sample application (Classified ads) and then add more slides to my tutorial just in time for my afternoon expert talk on Learning Tools Interoperability.

I decided to sign up for a 10-minute lightning talk about “Teaching Programming to non-Programmers” – this is something I think a lot about and get “a little cranky” about from time to time. I have written a few blog posts expressing my frustration about the way we teach the first “programming class” but had been nervous about giving a talk about the topic (which is a bit critical of the status quo).

I did not have enough time to make slides for my lightning talk so I just scribbled down some rough notes and started talking off the cuff. It seemed like the audience was not going to lynch me so I kept talking about increasingly non-status-quo radical ideas about what was wrong with the current approach and how I saw it differently in the future. I just said what I felt about the matter using very direct and risky language and let the chips fall. By the end, I figured I had said so much that I would be escorted from the premises.

When I stopped talking, I waited for the questions or criticism. But instead people started telling me their own stories about their own frustration with the status quo in teaching about technology. These conversations continued into the evening party. At some point in these conversations, I realized that I was among people who had made it through the educational system and come out on the other side. They could see that programming, networks, data, technology, etc. was a wonderfully creative place to be and a lot of fun. And most of the folks wished that lots of other people could share the fun we were all having. I was among my own kind – those who think of computing and technology as a form of creative expression.

But there was no time to rest – I had my Google App Engine Workshop to give – so again on Thursday night I stayed up late getting prepared for the Friday workshop.

On Friday morning, both of the teams working on Basic LTI had found a bug in the Moodle support for Basic LTI. I had meant to test it out myself – but ran out of time – so we sat down Friday morning and found and fixed the code. Steve Coppin had already found and fixed one bug in the Moodle code (the hard one) and sent it to the Moodle Basic LTI team. I helped Steve find the last (easy) bug and got all the teams going again.

In the afternoon on Friday, after a bit of a scare getting my handouts printed (the University of London Union staff totally saved me!) and finding a Mac Air VGA adapter (yikes!) things settled down and my workshop started.

Since most of the folks at the meeting were geeks – getting software installed and the simple demos working was a piece of cake. I quickly sold the books I had brought over – so I had enough money for cab fare for my return. We cruised through the first 3 hours of the lectures and hands-on exercises having a great time. It was fun to teach a new language to existing programmers – we could move pretty quickly.

Then we got to Chapter 8 about how App Engine does not support relational data but instead the Datastore is sorted, sharded data aimed at quickly reading through large amounts of data. As I was describing the advantages of the Datastore – the crowd started to get a bit “restless”. They started thinking that App Engine was kind of crappy and started asking even tougher questions. I started to defend App Engine and point out its strengths and we moved into a free flowing discussion that went from search engine architecture to the future of computer science – reacting to the in-depth questions made me think a lot on my feet about “how” and “why” and “what this all means in the grand scheme of things”.
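To make the “sorted data” point a bit more concrete, here is a toy Python sketch of why keeping keys in sorted order makes it cheap to read through a contiguous range of data. This is not the App Engine Datastore API: the class `SortedIndex` and its `put` and `scan` methods are made up for illustration, and sharding is left out entirely.

```python
import bisect


class SortedIndex(object):
    """Toy stand-in for a key-sorted index (hypothetical, not App Engine)."""

    def __init__(self):
        self._keys = []      # keys kept in sorted order
        self._values = {}    # key -> stored value

    def put(self, key, value):
        # Insert the key at its sorted position the first time it is seen.
        if key not in self._values:
            bisect.insort(self._keys, key)
        self._values[key] = value

    def scan(self, start, end):
        """Yield (key, value) pairs with start <= key < end, in key order."""
        lo = bisect.bisect_left(self._keys, start)
        hi = bisect.bisect_left(self._keys, end)
        for key in self._keys[lo:hi]:
            yield key, self._values[key]


index = SortedIndex()
for name in ["carol", "alice", "bob", "dave"]:
    index.put(name, {"name": name})

# Finding where the range starts is a binary search; after that the scan
# just walks forward through neighbouring keys.
result = [key for key, _ in index.scan("b", "d")]
```

The trade-off the workshop crowd pushed back on is visible even in this toy: range scans over the sort order are fast, but there is nothing here resembling a relational join.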

The group discussion that ensued was lovely – and by the end, I think that we all learned something by having our collective thinking bent one way and then another exploring this new technology and approach to data storage. I know I learned plenty during the impromptu “debate” part of the evening.

The awards dinner on Friday was great fun – you could start to see the JISC leaders and organizing committee relax a bit – we were past the half-way point and everything was going fine.

Saturday morning, I no longer had any formal duties so I figured I would relax and judge the Basic LTI competition for the “bounty”. There was some space in the Arduino hardware workshop so I decided to sit in and learn how to teach using Arduino. I was having great fun learning Arduino and making an LED blink on and off when Tobias Schiebeck of Manchester came in because he was having trouble plugging Access Grid into Sakai using JSR-168 and he thought there might be a bug in JSR-168. He showed me what was happening and it looked like a bug so I stepped out of the Arduino workshop to look at it more thoroughly.

I had written the support for Sakai for JSR-168 back in 2005 and the bug was something I sort-of remembered in the back of my mind as something that I had never fully tested. It had to do with sessions of different scope in JSR-168. Now he was getting stuck – his application was working but there was some yucky text on the screen because of the bug. He had even fixed one bug and then found another bug and had sent a note to the developers list some time ago which somehow I had missed. So we started to jointly hack on Sakai’s JSR-168 support. I wanted to figure this out once and for all and get it fixed right. It was great to have Tobias sitting next to me who (a) needed the fix, (b) knew Sakai internals pretty well, (c) would help me work through the issues, and (d) would use the fix immediately.

We started about 10 AM – it was slow going at first because I had not touched the code in five years – but Tobias knew the code well enough to help me start to remember things. We quickly narrowed the problem down to the code he had patched – but his patch was not enough. So we just kept digging – we broke for a quick lunch and got back to coding and debugging. I kept being pulled out to judge the prizes and other things but would always come back and code more. We worked through the awards ceremony and it started to feel closer and closer. And finally I realized what was going wrong at a high level and started to narrow down the problem and solution. We started trying things and we were both testing and editing our own copy of Sakai at the same time. I got closer and closer – but finally Tobias had to leave to catch his 6PM train. I told him to save his version of the code and pack up while I ran one more test that I thought was finally going to make it work. He stood there as my Sakai instance restarted and after a few minutes we ran the test and realized that we had the fix. Tobias was quite happy – as he ran to the train to go home to Manchester. In four hours, together we had cracked a bug that had eluded me for five years hidden deep in the code of Sakai.

By the time we were done, the room had become empty – and the staff were cleaning up the papers – and had taken away our power plug – and the food was gone – but we got the bug fixed and finished and Tobias made his train with five minutes to spare!

Back to the main reason I came to Dev8D, the IMS Basic LTI prize winners were great – they had built and integrated their tools into Moodle, Sakai, Blackboard, and WebCT using Basic LTI in less than 48 hours. I was really proud. One of the projects integrated an existing reading list application and the other used Apache Wookie to plug widgets into a learning management system using Basic LTI.

Afterwards, I talked to both teams about helping work on and support the Moodle Basic LTI software and I think that this might work out.

So in summary, I came with two ideas in mind and ended up doing a lot of things that just popped up as an opportunity. I worked on code from five years ago and worked on code that will be in the next release of Moodle – I did a bit of teaching and a lot of learning. A big “thanks” to Dan Hagon, Scott Wilson, Mark Johnson, Steve Coppin, and Ben Charlton.

I will write a separate blog post with screen shots of the software when I get more time! We awarded the Asus EEPC and iPod provided by Blackboard and gave away IMS T-Shirts – I was quite thankful as my luggage would be much lighter for the rest of the trip!

Being in the same rooms for four days with such smart people in a completely supportive environment (with plenty of snacks) was like several weeks of working by myself in terms of the variety of things I worked on. I made progress on so many fronts and had such a great time doing it.